- Spark Actions And Transformations

- Spark Transformations And Actions Cheat Sheet Printable

- Spark Transformation Example

- Spark Transformations And Actions Cheat Sheet Answers

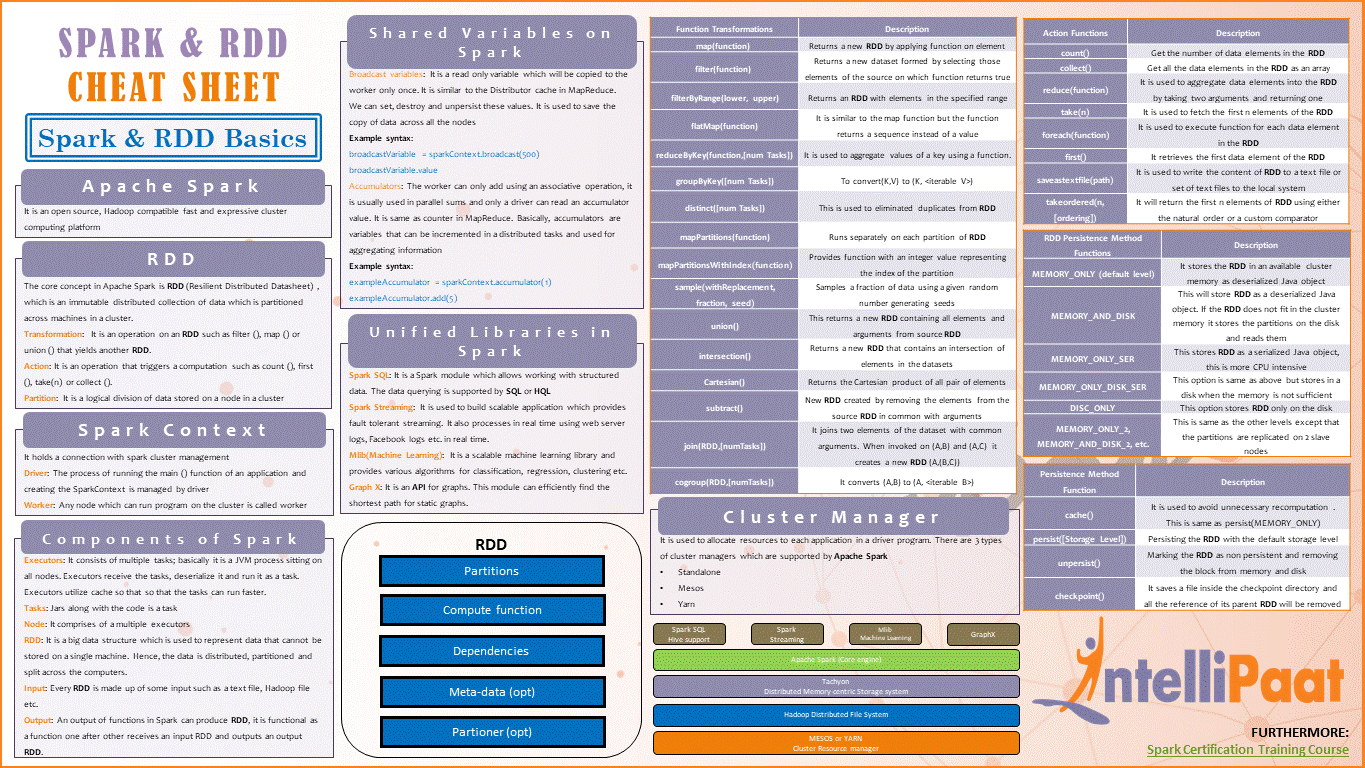

- Spark DataFrame Cheat Sheet. Cheatsheet for Apache Spark DataFrame. DataFrame is simply a type alias of `Dataset[Row]`. Quick reference: `val spark = SparkSession.builder.appName("Spark SQL basic example").master("local").getOrCreate()`, followed by `import spark.implicits._` for implicit conversions such as converting RDDs to DataFrames.

- Spark is a versatile big data engine that handles batch processing, real-time processing, data caching, and more. It includes a rich set of machine learning libraries that data scientists and analytics teams can use to build robust, interactive, and fast applications.

The concepts of actions and transformations in Apache Spark are not something we think about every day while using Spark, but occasionally it is good to know the distinction.

First of all, we may be asked that question during a job interview. It is a good question because it helps spot people who did not bother reading the documentation of the tool they are using. Fortunately, after reading this article, you will know the difference.

A Spark application usually consists of Spark operations, which can be either transformations or actions on your data sets using Spark's RDD, DataFrame, or Dataset APIs.

In addition to that, we may need to know the difference to explain why the code we are reviewing is too slow. For example, some data engineers have strange ideas like calculating counts in the middle of a Spark job to log the number of rows or store them as a metric. While a well-placed count may help with debugging and tremendously speed up problem-solving, counting the number of rows in every other line of code is massive overkill. The difference between a transformation and an action explains why it is a significant bottleneck: count is an action, so every call forces Spark to run all the transformations that lead up to it.

What is a transformation?

A transformation is any Spark operation that returns a DataFrame, Dataset, or RDD. When we build a chain of transformations, we add building blocks to the Spark job, but no data gets processed. That is possible because transformations are lazily executed: Spark computes the result only when an action needs it.

Of course, this also means that Spark needs to recompute the values when we re-use the same transformations. We can avoid that by using the persist or cache functions.

What is an action?

Actions, on the other hand, are not lazily executed. When we put an action in the code and Spark reaches that line of code when running the job, it will have to perform all of the transformations that lead to that action to produce a value.

Producing a value is the key concept here. While transformations return one of the Spark data types, actions return a count of elements (for example, the count function), a list of them (collect, take, etc.), or store the data in external storage (write, saveAsTextFile, and others).
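To make the distinction concrete, here is a toy model in plain Python. It is only a sketch of the idea and not how Spark is implemented: `LazyDataset`, its `log` attribute, and the variable names are all hypothetical. Transformations (`map`, `filter`) just record a step, actions (`count`, `collect`) run the recorded chain, and `cache` keeps the result of the first action so later actions can reuse it.

```python
class LazyDataset:
    """Toy stand-in for an RDD or DataFrame (hypothetical, not Spark's real code)."""

    def __init__(self, rows=None, parent=None, step=None, name="source"):
        self._rows = rows        # only set on the source dataset
        self._parent = parent    # previous link in the chain
        self._step = step        # function applied to the parent's rows
        self._name = name
        self._persist = False
        self._cached = None
        # every dataset in one chain shares a single execution log
        self.log = parent.log if parent is not None else []

    # Transformations: return a new dataset, process nothing.
    def map(self, fn):
        return LazyDataset(parent=self, name="map",
                           step=lambda rows: [fn(r) for r in rows])

    def filter(self, pred):
        return LazyDataset(parent=self, name="filter",
                           step=lambda rows: [r for r in rows if pred(r)])

    def cache(self):
        self._persist = True     # only a marker; nothing is computed yet
        return self

    def _compute(self):
        if self._cached is not None:
            return self._cached
        if self._parent is None:
            rows = list(self._rows)
        else:
            rows = self._step(self._parent._compute())
            self.log.append(self._name)   # record that this step really ran
        if self._persist:
            self._cached = rows
        return rows

    # Actions: run the chain and return a plain Python value.
    def count(self):
        return len(self._compute())

    def collect(self):
        return list(self._compute())


# Transformations only build the chain, so the log stays empty.
uncached = (LazyDataset(rows=range(10))
            .filter(lambda x: x % 2 == 0)
            .map(lambda x: x * x))
steps_before_action = list(uncached.log)

# Each action re-runs the whole chain.
n = uncached.count()
rows = uncached.collect()
steps_without_cache = list(uncached.log)

# With cache(), the first action stores the result and the second reuses it.
cached = (LazyDataset(rows=range(10))
          .filter(lambda x: x % 2 == 0)
          .map(lambda x: x * x)
          .cache())
cached.count()
cached.collect()
steps_with_cache = list(cached.log)

print(steps_before_action)   # []
print(n, rows)               # 5 [0, 4, 16, 36, 64]
print(steps_without_cache)   # ['filter', 'map', 'filter', 'map']
print(steps_with_cache)      # ['filter', 'map']
```

The doubled entries in `steps_without_cache` are the toy equivalent of a count placed in the middle of a job: every extra action pays for the whole chain again unless the intermediate result is cached.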

How to tell the difference

When we look at the Spark API, we can easily spot the difference between transformations and actions. If a function returns a DataFrame, Dataset, or RDD, it is a transformation. If it returns anything else or does not return a value at all (Unit in the Scala API), it is an action.

Basic data munging operations: structured data

This page is under development.

| | Python pandas | PySpark RDD | PySpark DF | R dplyr | Revo R dplyrXdf |
|---|---|---|---|---|---|
| subset columns | df.colname, df['colname'] | rdd.map() | df.select('col1', 'col2', ..) | select(df, col1, col2, ..) | |
| new columns | df['newcolumn']=.. | rdd.map(function) | df.withColumn('newcol', content) | mutate(df, col1=col2+col3, col4=col5^2,..) | |
| subset rows | df[1:10], df.loc['rowname':] | rdd.filter(function or boolean vector), rdd.subtract() | | filter | |
| sample rows | | rdd.sample() | | | |
| order rows | | | df.sort('col1') | arrange | |
| group & aggregate | df.sum(axis=0), df.groupby(['A', 'B']).agg([np.mean, np.std]) | rdd.count(), rdd.countByValue(), rdd.reduce(), rdd.reduceByKey(), rdd.aggregate() | df.groupBy('col1', 'col2').count().show() | group_by(df, var1, var2,..) %>% summarise(col=func(var3), col2=func(var4), ..) | rxSummary(formula, df) or group_by() %>% summarise() |
| peek at data | df.head() | rdd.take(5) | df.show(5) | first(), last() | |
| quick statistics | df.describe() | | df.describe() | summary() | rxGetVarInfo() |
| schema or structure | | | df.printSchema() | | |

..and there's always SQL

Syntax examples

Python pandas

PySpark RDDs & DataFrames

RDDs

Transformations return pointers to new RDDs

- map, flatMap: flexible

- reduceByKey

- filter

Actions return values

- collect

- reduce: for cumulative aggregation

- take, count

A reminder: how lambda functions, map, reduce and filter work
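As a refresher, the snippet below shows these building blocks on an ordinary Python list, with no Spark involved. The sample data and the `reduce_by_key` helper are made up for illustration; the helper only mimics, on a local list, what `rdd.reduceByKey()` does conceptually.

```python
from functools import reduce

numbers = [1, 2, 3, 4, 5]

# A lambda is an anonymous, single-expression function.
square = lambda x: x * x

# map applies a function to every element.
squares = list(map(square, numbers))

# filter keeps the elements for which the function returns True.
evens = list(filter(lambda x: x % 2 == 0, numbers))

# reduce folds the elements pairwise into a single value.
total = reduce(lambda a, b: a + b, numbers)


def reduce_by_key(fn, pairs):
    """Hypothetical local helper: combine the values of equal keys with fn."""
    result = {}
    for key, value in pairs:
        result[key] = fn(result[key], value) if key in result else value
    return result


sums_by_key = reduce_by_key(lambda a, b: a + b, [("a", 1), ("b", 2), ("a", 3)])

print(squares)      # [1, 4, 9, 16, 25]
print(evens)        # [2, 4]
print(total)        # 15
print(sums_by_key)  # {'a': 4, 'b': 2}
```

In Python 3, `map` and `filter` return lazy iterators, which is why they are wrapped in `list()` here. That is loosely similar to how RDD transformations produce nothing until an action asks for a result.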

Partitions: rdd.getNumPartitions(), sc.parallelize(data, 500), sc.textFile('file.csv', 500), rdd.repartition(500)

Additional functions for DataFrames

If you want to use an RDD method on a DataFrame, you can often call df.rdd.function().

Miscellaneous examples of chained data munging:

Further resources

- My IPyNB scrapbook of Spark notes

- Spark programming guide (latest)

- Spark programming guide (1.3)

- Introduction to Spark illustrates how Python functions like map & reduce work and how they translate into Spark, plus many data munging examples in pandas and then Spark

R dplyr

The 5 verbs:

- select = subset columns

- mutate = new cols

- filter = subset rows

- arrange = reorder rows

- summarise

Additional functions in dplyr

- first(x) - The first element of vector x.

- last(x) - The last element of vector x.

- nth(x, n) - The nth element of vector x.

- n() - The number of rows in the data.frame or group of observations that summarise() describes.

- n_distinct(x) - The number of unique values in vector x.

Revo R dplyrXdf

Notes:

- xdf = 'external dataframe' or distributed one in, say, a Teradata database

- If necessary, transformations can be done using rxDataStep(transforms=list(..))

Manipulation with dplyrXdf can use:

- filter, select, distinct, transmute, mutate, arrange, rename,

- group_by, summarise, do

- left_join, right_join, full_join, inner_join

- these functions are supported by rx: sum, n, mean, sd, var, min, max

Further resources